Capstone: The Journey Thus Far

The Project

My fourth-year Capstone Engineering Design Project at the University of Waterloo is a mobile robot that can automate the inspection of the boiler rooms in nuclear power plants. The project is currently under development by me and a team of four other undergraduate students.

The ultimate aim of the project is to reduce the exposure of workers to the radioactive conditions present in a nuclear boiler room. In Canada, the radiation exposure of nuclear energy workers is strictly regulated by the Canadian Nuclear Safety Commission. Because each worker is only allowed a certain maximum radiation dose, reducing worker exposure will also reduce the number of workers required.

There are a number of functions that the robot should be able to perform. The most interesting and most difficult of these is the ability to automatically measure the thickness of pipes to check for deterioration. In nuclear power plants today, this task is performed manually by a worker with a handheld ultrasonic sensor. Using an automated system has the potential to increase the precision and repeatability of measurement. In addition, the robot should perform passive measurements of environmental conditions like temperature, noise level, and radiation. Finally, the robot must be equipped with a robust safety system. For example, the robot should stop moving when any unexpected obstacle enters its workspace. It also requires remote and onboard emergency stops.

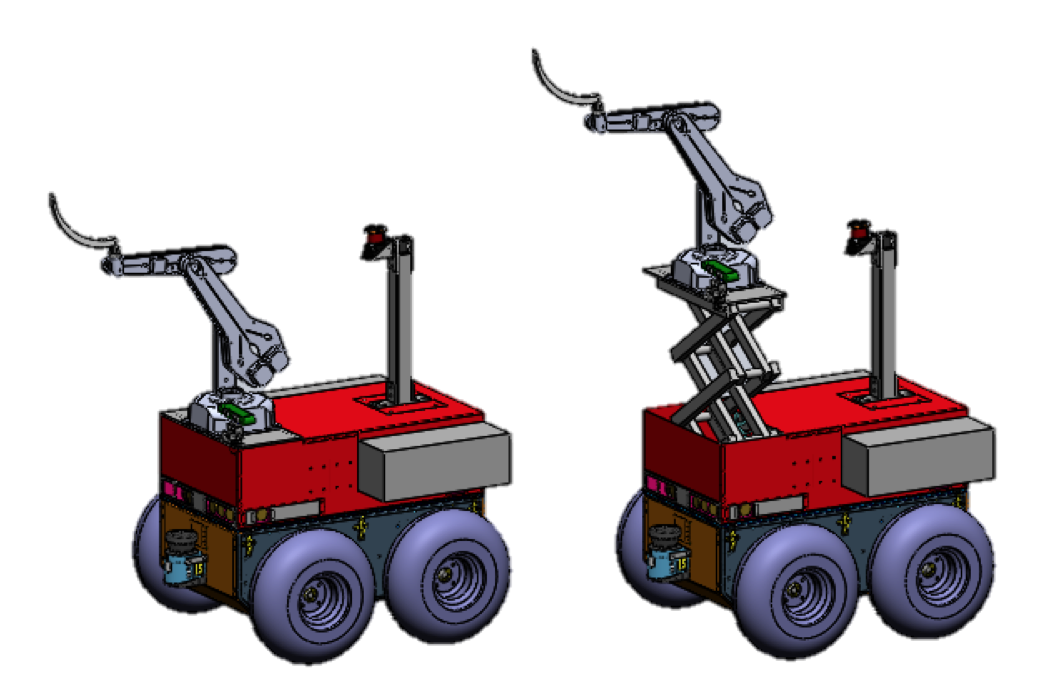

The design we’ve decided to build consists of a four-wheel mobile platform carrying a six-degree-of-freedom robot arm. The ultrasonic sensor for measuring pipe thickness is attached to the arm’s end effector. We decided that the mobile base will be teleoperated by a worker outside the boiler room, since we would not have time to implement full autonomy. The process of measuring the thickness of pipes, however, is to be fully autonomous. A conceptual CAD model of the design is shown above.

My Work

I focus almost exclusively on various software aspects of the system. My first task was to work with our robotic arm, the ST Robotics R12. We wanted a Linux-compatible interface so we could use ROS with the arm, but naturally it only ships with a Windows client. Luckily, the client does not actually do any command parsing or processing. The arm accepts commands in ROBOFORTH, a proprietary language written by ST Robotics and based on Forth. The commands are processed by an onboard microcontroller, which then sends the signals to a separate Digital Signal Processor that actually drives the motors.

Since there was no mysterious processing being done by the client, I was able to program a simple serial interface in Python to abstract writing to and reading from the arm. I also created an interactive shell that can be used to interact with the arm, as a replacement for the Windows client. Both are packaged in the r12 pip package, the source for which can be found here.
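To give a rough idea of what such a serial wrapper can look like, here is a minimal sketch. It is not the code from the r12 package: the class name `ArmLink`, the carriage-return terminator, the `>` prompt character, and the baud rate are all assumptions made for illustration, and `open_serial` relies on the third-party pyserial library.

```python
class ArmLink:
    """Thin wrapper around a byte-oriented port (hypothetical, not the r12 API)."""

    def __init__(self, port, terminator=b"\r", prompt=b">"):
        # `port` is any object with write(bytes) and read(n) -> bytes,
        # such as a pyserial Serial instance.
        self.port = port
        self.terminator = terminator  # assumed end-of-command byte
        self.prompt = prompt          # assumed end-of-response marker

    def write(self, command):
        """Send one command string to the arm."""
        self.port.write(command.strip().encode("ascii") + self.terminator)

    def read(self, max_bytes=4096):
        """Collect bytes until the prompt appears, a read times out,
        or max_bytes have been received."""
        buffer = b""
        while len(buffer) < max_bytes:
            chunk = self.port.read(1)
            if not chunk:  # an empty read means the port timed out
                break
            buffer += chunk
            if buffer.endswith(self.prompt):
                break
        return buffer.decode("ascii", errors="replace")


def open_serial(device, baudrate=19200, timeout=1.0):
    """Open a real serial port; the default baud rate is an assumption."""
    import serial  # third-party: pip install pyserial
    return ArmLink(serial.Serial(device, baudrate=baudrate, timeout=timeout))
```

Keeping the port as a constructor argument means the wrapper can be exercised with a fake port object, without the arm attached.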

My next large area of focus was developing a preliminary vision system to identify locations on pipes to measure and then command the arm to move accordingly. Building atop the r12 package for interfacing with the arm, I wrote a program to process the colour and depth feeds from our Xtion PRO LIVE and translate them to the robot arm’s coordinate frame. I used OpenCV to detect the location of coloured markings on pipes, which, when combined with information from the depth feed, yields a point in 3D space. It is then reasonably straightforward to transform the point from the camera’s reference frame to the robot arm’s, as the camera’s orientation and position relative to the arm are known.
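The geometry behind that last step can be sketched as follows. This is an illustration under assumptions, not the project's code: it assumes a simple pinhole camera model, and the function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the rotation and translation values are hypothetical placeholders that would come from calibration and from measuring the camera mount.

```python
def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a depth reading into the camera frame.

    Assumes a pinhole model; depth and the result share the same unit.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_arm(point, rotation, translation):
    """Apply the rigid transform p_arm = R * p_cam + t.

    rotation is a 3x3 nested list, translation a length-3 sequence.
    """
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical values, for illustration only.
FX = FY = 570.0          # assumed focal lengths in pixels
CX, CY = 320.0, 240.0    # assumed principal point of a 640x480 image
R_CAM_TO_ARM = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # camera axes aligned with the arm's
T_CAM_TO_ARM = (0.1, 0.0, 0.5)                    # assumed camera offset from the arm base

marking_cam = pixel_to_camera(400, 260, 1.2, FX, FY, CX, CY)
marking_arm = camera_to_arm(marking_cam, R_CAM_TO_ARM, T_CAM_TO_ARM)
```

Here the pixel coordinates would be the centroid of a detected marking, and the depth would be read from the depth feed at that pixel.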

I am currently working to improve the robustness of the vision system by testing different methods of filtering out noise and by trying more distinctive markings on the pipes. Specifically, I am experimenting with bichromatic markings (i.e. a marking consisting of two colours next to each other). The system should produce false positive detections much less often when looking for bichromatic markings instead of monochromatic ones, because an object with the same pair of adjacent colours is less likely to appear in the background environment than an object with just one of them.
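One way to picture the bichromatic check is as a pairing step that runs after each colour has been detected separately. The sketch below is an assumption about how this could be done, not the method the project uses: the function name `pair_bichromatic` and the `max_gap` threshold are hypothetical, and the inputs are taken to be pixel centroids of blobs already found for each colour.

```python
import math


def pair_bichromatic(centroids_a, centroids_b, max_gap):
    """Pair each colour-A blob with its nearest unused colour-B blob.

    A pair counts as a marking only if the two centroids are at most
    max_gap pixels apart. Returns the midpoint of each accepted pair.
    """
    markings = []
    used_b = set()
    for ax, ay in centroids_a:
        best_index, best_dist = None, max_gap
        for i, (bx, by) in enumerate(centroids_b):
            if i in used_b:
                continue
            dist = math.hypot(ax - bx, ay - by)
            if dist <= best_dist:
                best_index, best_dist = i, dist
        if best_index is not None:
            used_b.add(best_index)
            bx, by = centroids_b[best_index]
            markings.append(((ax + bx) / 2, (ay + by) / 2))
    return markings
```

A lone blob of either colour is discarded because it has no partner within `max_gap`, which is where the reduction in false positives would come from.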

I also spent some time developing a ROS node to interface with the arm, using the serial interface I mentioned previously. I implemented basic functionality to perform absolute and relative movement of the robot’s joints and end effector. I am now helping another team member extend the node to work with MoveIt!, a motion planning framework for ROS.

Next, I expect to be writing a fair bit of code to control the movement of the mobile base, as the hardware is just about in place to allow it to drive. I also expect to do some work with networking once we start implementing teleoperation and, potentially, remote data logging.

Endgame

The project must be completed by March 17th, when it will be presented as a part of the Mechatronics Design Symposium held by the university. It shall be a busy term.